Containerization changed how applications are packaged and deployed. Yet managing hundreds or thousands of these isolated, portable units across diverse environments quickly introduced a new layer of complexity. Enter Kubernetes, the open-source container orchestration platform that has become the de facto standard for deploying, scaling, and managing containerized workloads. More than a single tool, Kubernetes provides an extensible framework that automates critical operational tasks and supports high availability, resilience, and efficiency for applications ranging from microservices to monoliths. It acts as the conductor of the cloud-native orchestra, coordinating workloads across today’s intricate digital ecosystems.

The Container Revolution and Its Orchestral Need

To fully grasp the transformative power of Kubernetes, it’s essential to understand the problem it solves: the inherent complexity of managing containerized applications at scale.

A. The Genesis of Containerization

Before containers, deploying applications was often a cumbersome process fraught with dependency conflicts and environmental inconsistencies.

- Traditional Deployment (Bare Metal/VMs): Applications were deployed directly onto physical servers or virtual machines (VMs). This often led to ‘dependency hell,’ where an application worked on one machine but failed on another due to differing library versions, operating system configurations, or environment variables. VMs solved some isolation issues but carried significant overhead, as each VM required its own guest OS.

- The Docker Breakthrough: Docker popularized containerization by providing a lightweight, portable, and isolated environment for applications. A container packages an application and all its dependencies (libraries, frameworks, configurations) into a single, runnable unit. This ensured that an application would run consistently across any environment that supported Docker, from a developer’s laptop to a production server.

- Benefits of Containers:

- Portability: Run anywhere consistently.

- Isolation: Applications are isolated from each other and the host system.

- Efficiency: Lighter weight than VMs, sharing the host OS kernel, leading to faster startup times and better resource utilization.

- Agility: Faster development, testing, and deployment cycles.

B. The Challenge of Container Sprawl

While Docker solved the ‘it works on my machine’ problem, it quickly introduced a new challenge: how do you manage hundreds, thousands, or even tens of thousands of containers? This is where container orchestration became a necessity.

- Scaling Containers: How do you run multiple instances of a container to handle increased traffic? How do you distribute them across a cluster of servers?

- Healing Containers: What happens if a container crashes or a server fails? How do you automatically restart failed containers or migrate them to healthy servers?

- Networking Containers: How do containers communicate with each other, especially across different hosts? How do you expose them to the outside world?

- Storage for Containers: How do you manage persistent data for containers that are inherently ephemeral?

- Load Balancing: How do you distribute incoming requests evenly among multiple instances of a containerized application?

- Rolling Updates and Rollbacks: How do you update an application with zero downtime? What if a new version introduces a bug and you need to revert quickly?

- Resource Management: How do you ensure containers don’t consume too many resources, and how do you optimize resource utilization across the cluster?

Handling these tasks by hand for a large number of containers is impractical, which created an urgent need for an automated orchestration layer.

Kubernetes: The Orchestrator’s Core Principles

Kubernetes (often abbreviated as K8s) grew out of Google’s experience with ‘Borg’, its internal system for managing a vast fleet of containers. It’s built on a set of powerful principles that enable robust, self-healing, and scalable application deployments.

A. Declarative Configuration and Desired State

A cornerstone of Kubernetes is its reliance on declarative configuration. Instead of telling Kubernetes how to do something, you tell it what the desired state of your application and infrastructure should be.

- YAML/JSON Definitions: You define your applications (e.g., number of replicas, container images, resource limits, network policies) in YAML or JSON files.

- Control Plane: The Kubernetes control plane (kube-apiserver, etcd, kube-scheduler, kube-controller-manager, and optionally cloud-controller-manager) stores your desired state, and its controllers continuously compare the actual state of the cluster against it.

- Self-Healing: If there’s a discrepancy (e.g., a container crashes, a server fails), Kubernetes automatically takes action to bring the cluster back to the desired state, making it inherently self-healing and highly resilient.

This declarative approach simplifies management, increases consistency, and enables robust automation.
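The desired-state idea above can be sketched as a tiny reconciliation loop. This is a toy model, not Kubernetes code: the `Observed` class, the action strings, and the assumption that every action succeeds instantly are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Observed:
    """Hypothetical snapshot of what is actually running for one app."""
    running_replicas: int


def reconcile(desired_replicas: int, observed: Observed) -> list:
    """Return the actions needed to move the observed state to the desired state."""
    diff = desired_replicas - observed.running_replicas
    if diff > 0:
        return [f"start pod #{observed.running_replicas + i + 1}" for i in range(diff)]
    if diff < 0:
        return [f"stop pod #{observed.running_replicas - i}" for i in range(-diff)]
    return []  # already converged: nothing to do


def control_loop(desired_replicas: int, observed: Observed, max_rounds: int = 5) -> int:
    """Reconcile repeatedly until no actions remain; return the rounds that did work."""
    for round_no in range(max_rounds):
        actions = reconcile(desired_replicas, observed)
        if not actions:
            return round_no
        # Simplification: pretend every action succeeds immediately.
        observed.running_replicas = desired_replicas
    return max_rounds
```

You only ever state the target (three replicas); the loop works out whether that means starting Pods, stopping Pods, or doing nothing.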

B. Immutability and Idempotency

Kubernetes heavily leverages the concepts of immutable infrastructure and idempotency.

- Immutable Containers: Once a container image is built, it’s never modified. If a change is needed, a new image is built, and new containers are deployed, replacing the old ones. This eliminates ‘configuration drift’ and makes deployments more predictable.

- Idempotent Operations: Kubernetes operations are designed to be idempotent. Applying the same configuration multiple times will always result in the same desired state, without unintended side effects. This is crucial for automation and self-healing.
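To make idempotency concrete, here is a minimal sketch of an "apply" operation against an in-memory dictionary. The `apply` function, the `cluster` store, and the returned status labels are illustrative assumptions, not the real API server logic.

```python
import copy


def apply(store: dict, manifest: dict) -> str:
    """Upsert a manifest into an in-memory store keyed by (kind, name)."""
    key = (manifest["kind"], manifest["metadata"]["name"])
    if key not in store:
        store[key] = copy.deepcopy(manifest)
        return "created"
    if store[key] == manifest:
        return "unchanged"  # re-applying the same manifest has no effect
    store[key] = copy.deepcopy(manifest)
    return "configured"


cluster = {}
web = {"kind": "Deployment", "metadata": {"name": "web"}, "spec": {"replicas": 3}}
results = [apply(cluster, web) for _ in range(3)]
```

However many times the same manifest is applied, the store ends up with exactly one `web` Deployment with three replicas.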

C. Microservices-Friendly Architecture

While Kubernetes can run monolithic applications, its architecture is particularly well-suited for microservices, due to its emphasis on independent, decoupled components.

- Service Discovery: Kubernetes provides built-in service discovery, allowing microservices to easily find and communicate with each other without hardcoding IP addresses.

- Load Balancing: Services automatically get load balancing across their multiple instances.

- Independent Scaling: Individual microservices (or groups of containers) can be scaled independently based on their specific demand, optimizing resource utilization.

- Fault Isolation: The containerized nature, combined with Kubernetes’ self-healing, means the failure of one microservice is less likely to cascade and affect the entire application.

D. Extensibility via APIs and Custom Resources

Kubernetes is highly extensible, allowing users to tailor it to their specific needs.

- API-Driven: All Kubernetes operations are exposed via a rich API. This allows for programmatic interaction and integration with other tools and systems.

- Custom Resource Definitions (CRDs): Users can define their own custom resources, extending the Kubernetes API to manage unique application components or infrastructure elements as native Kubernetes objects. This enables powerful automation and integration (e.g., using Operators).

- Operators: Software extensions that automate operational tasks for specific applications (e.g., managing a database cluster). Operators leverage CRDs to define custom resources and encapsulate application-specific operational knowledge.

E. Abstraction of Infrastructure

Kubernetes abstracts away much of the underlying infrastructure complexity, allowing developers and operators to focus on the application layer.

- Node Management: Kubernetes handles scheduling containers onto available worker nodes (servers) and manages node health, abstracting away the need for manual server provisioning for each container.

- Network Abstraction: It provides an abstract networking model, allowing containers to communicate across nodes without worrying about underlying IP addresses or network topologies.

- Storage Abstraction: Kubernetes provides a flexible storage layer that allows containers to access various types of persistent storage without direct knowledge of the underlying storage system (e.g., cloud block storage, network file systems).

This abstraction layer simplifies deployment and management across diverse environments, from on-premise data centers to public clouds.

Key Components of the Kubernetes Architecture

A Kubernetes cluster is composed of several interconnected components, working in harmony to manage containerized workloads.

A. The Control Plane (Master Node Components)

The control plane is the brain of the Kubernetes cluster, managing the worker nodes and the workloads running on them.

- Kube-API Server: The front-end of the Kubernetes control plane. It exposes the Kubernetes API, which is used by all internal components and external users (via kubectl). It processes REST requests, validates them, and updates the state in etcd.

- etcd: A highly available, consistent, and distributed key-value store. It serves as Kubernetes’ backing store for all cluster data, configurations, and state. All cluster information is stored here.

- Kube-Scheduler: Watches for newly created Pods that have no assigned node, and selects a node for them to run on. The scheduler takes into account resource requirements, hardware constraints, policy constraints, affinity and anti-affinity specifications, and data locality.

- Kube-Controller-Manager: Runs controller processes. A controller watches the shared state of the cluster through the API server and makes changes to move the current state towards the desired state. Examples include the Node controller (notifies if nodes go down), Replication controller (maintains desired number of Pods), and Endpoints controller (joins Services and Pods).

- Cloud-Controller-Manager (Optional): Integrates with underlying cloud provider APIs (e.g., AWS, Azure, GCP) to manage cloud-specific resources like load balancers, managed disks, and network routes.
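The scheduler’s job described above can be pictured as a filter step followed by a scoring step. The sketch below is a deliberately simplified model: the dictionary fields (`free_cpu_m`, `free_mem_mi`) and the "most CPU left over" scoring rule are assumptions for illustration, and the real scheduler weighs many more signals.

```python
from typing import Optional


def schedule(pod: dict, nodes: list) -> Optional[str]:
    """Pick a node for the pod, or return None if nothing fits."""
    # Filter: keep nodes with enough unreserved CPU and memory for the requests.
    feasible = [
        n for n in nodes
        if n["free_cpu_m"] >= pod["cpu_m"] and n["free_mem_mi"] >= pod["mem_mi"]
    ]
    if not feasible:
        return None  # in a real cluster the Pod would stay Pending
    # Score: prefer the node that keeps the most free CPU afterwards.
    best = max(feasible, key=lambda n: n["free_cpu_m"] - pod["cpu_m"])
    # Bind: reserve the requested resources on the chosen node.
    best["free_cpu_m"] -= pod["cpu_m"]
    best["free_mem_mi"] -= pod["mem_mi"]
    return best["name"]


nodes = [
    {"name": "node-a", "free_cpu_m": 500, "free_mem_mi": 1024},
    {"name": "node-b", "free_cpu_m": 2000, "free_mem_mi": 4096},
]
```

A Pod requesting 1000m CPU can only land on `node-b` here, because `node-a` is filtered out before scoring even starts.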

B. Worker Nodes (Data Plane Components)

Worker nodes (called ‘minions’ in early Kubernetes releases) are where the actual containerized applications run.

- Kubelet: An agent that runs on each worker node. It communicates with the control plane’s API server, receives Pod specifications, and ensures that the containers described in the Pods are running and healthy on its node. It’s the primary “node agent.”

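- Kube-Proxy: A network proxy that runs on each node. It maintains network rules on nodes, enabling network communication to your Pods from inside or outside the cluster. These rules implement the Service abstraction by forwarding traffic sent to a Service’s virtual IP to one of its backing Pods. Name-based service discovery is handled separately by the cluster DNS add-on (e.g., CoreDNS).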

- Container Runtime: The software responsible for running containers. Docker was historically the most common choice, but Kubernetes works with any runtime that implements the Container Runtime Interface (CRI), such as containerd and CRI-O. Built-in support for Docker Engine (the dockershim) was removed in Kubernetes 1.24.

- Pod: The smallest deployable unit in Kubernetes. A Pod is an abstraction that represents a single instance of a running process in a cluster. It can contain one or more containers (tightly coupled applications that share storage and network resources) and shared resources for them.

C. Key Abstractions (Objects)

Kubernetes uses various abstractions (objects) to represent the desired state of your applications and the cluster.

- Service: An abstract way to expose an application running on a set of Pods as a network service. It defines a logical set of Pods (usually chosen by a label selector) and a policy by which to access them (e.g., a single stable IP address and DNS name for a set of web servers). Services can be exposed internally (ClusterIP, the default) or externally (NodePort, LoadBalancer).

- Deployment: A higher-level abstraction used for managing stateless applications. A Deployment provides declarative updates for Pods and ReplicaSets. You describe a desired state in a Deployment, and the Deployment Controller changes the actual state to the desired state at a controlled rate (e.g., rolling updates).

- ReplicaSet: Ensures that a specified number of Pod replicas are running at any given time. A Deployment typically manages a ReplicaSet.

- Namespace: A way to divide cluster resources among multiple users or teams. Namespaces provide a scope for names and prevent naming collisions. They are a logical separation within a cluster.

- Ingress: An API object that manages external access to services within the cluster, typically HTTP/S. It provides HTTP routing based on hostnames or URL paths, handling termination of SSL/TLS.

- ConfigMap and Secret: Used to inject configuration data (ConfigMap) or sensitive data (Secret – e.g., passwords, API keys) into Pods, separating configuration from application code.

- PersistentVolume (PV) and PersistentVolumeClaim (PVC): Abstractions for managing storage. PVs are pieces of storage in the cluster, either pre-provisioned by an administrator or provisioned dynamically through a StorageClass, while PVCs are requests for storage by users, allowing containers to consume storage without knowing the underlying infrastructure details.
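Many of these objects are tied together by labels: a Service, for instance, typically finds its Pods through a label selector. The following sketch shows equality-based matching only; the `endpoints_for` helper and the dictionary layout are hypothetical and stand in for what the control plane does when it builds a Service’s endpoint list.

```python
def matches(selector: dict, labels: dict) -> bool:
    """True when every key/value pair in the selector appears in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints_for(service: dict, pods: list) -> list:
    """Return the IPs of ready Pods whose labels match the Service selector."""
    return [
        pod["ip"] for pod in pods
        if pod["ready"] and matches(service["selector"], pod["labels"])
    ]


pods = [
    {"ip": "10.0.0.4", "ready": True, "labels": {"app": "web", "tier": "frontend"}},
    {"ip": "10.0.0.5", "ready": False, "labels": {"app": "web", "tier": "frontend"}},
    {"ip": "10.0.0.6", "ready": True, "labels": {"app": "db"}},
]
web_service = {"name": "web", "selector": {"app": "web"}}
```

Only the ready `web` Pod ends up behind the Service; the unready replica and the database Pod are left out.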

Transformative Advantages of Kubernetes

The adoption of Kubernetes brings a wealth of benefits that address many of the limitations of traditional and even basic container management, leading to significant operational and development advantages.

A. Unparalleled Scalability and Elasticity

Kubernetes excels at automatically managing and scaling containerized applications to meet fluctuating demand.

- Automated Scaling: The Horizontal Pod Autoscaler (HPA) automatically scales the number of Pod replicas up or down based on observed CPU utilization or custom metrics, ensuring applications perform optimally during traffic spikes.

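- Resource Optimization: Kubernetes schedules Pods onto nodes that have enough unreserved capacity for their resource requests, optimizing utilization across the cluster. Running Pods are not rebalanced automatically, but if a node comes under resource pressure the kubelet can evict Pods, and their controllers recreate them on other nodes.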

- Efficient Resource Allocation: You can define resource requests and limits for containers (CPU, memory), allowing Kubernetes to ensure that applications get the resources they need while preventing any single application from consuming all cluster resources.

- Global Reach: With managed Kubernetes services in public clouds, you can easily deploy and scale your applications across multiple geographical regions, ensuring low latency for global user bases.
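The Horizontal Pod Autoscaler’s core calculation is documented as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with a default tolerance of 0.1 around the target. Here is a sketch of that arithmetic; the function name and the min/max defaults are illustrative, and real HPA behavior adds stabilization windows and other details omitted here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Apply the HPA scaling ratio, then clamp to the configured bounds."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: do not scale
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))
```

For example, four replicas averaging 90% CPU against a 60% target scale to six, while ten replicas at 30% against the same target scale down to five.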

B. Enhanced Resilience and Self-Healing Capabilities

One of Kubernetes’ most powerful features is its built-in ability to self-heal and maintain the desired state, significantly improving application availability.

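- Automated Restarts: If a container crashes, the kubelet restarts it according to the Pod’s restart policy. If a Pod managed by a controller (such as a Deployment) is lost, the controller creates a replacement.

- Node Failure Handling: If an entire worker node fails, Kubernetes marks it as not ready and, after a timeout, Pods managed by controllers are recreated on healthy nodes in the cluster.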

- Health Checks: Liveness and readiness probes monitor application health, automatically restarting unhealthy containers or preventing traffic from being routed to unready ones.

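- Rollbacks: If a new deployment introduces issues, you can roll back to a previous, stable revision with minimal downtime (e.g., with kubectl rollout undo). Kubernetes does not roll back a Deployment on its own; automated rollbacks are typically added by CI/CD or progressive-delivery tooling.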
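Probes act on consecutive failures rather than on a single bad check. The sketch below models that counting logic with a threshold of 3, which matches the default failureThreshold; the `ProbeTracker` class and `run_probes` helper are hypothetical names. For a liveness probe, reaching the threshold leads to a container restart; for a readiness probe, the Pod stops receiving Service traffic.

```python
class ProbeTracker:
    """Counts consecutive probe failures and reports when the threshold is hit."""

    def __init__(self, failure_threshold: int = 3):
        self.failure_threshold = failure_threshold
        self.consecutive_failures = 0

    def record(self, success: bool) -> bool:
        """Record one probe result; return True once the threshold is reached."""
        if success:
            self.consecutive_failures = 0  # any success resets the streak
            return False
        self.consecutive_failures += 1
        return self.consecutive_failures >= self.failure_threshold


def run_probes(results, failure_threshold=3):
    """Return the index of the probe that trips the threshold, or None."""
    tracker = ProbeTracker(failure_threshold)
    for index, ok in enumerate(results):
        if tracker.record(ok):
            return index
    return None
```

A brief blip (two failures, then a success) does not trigger any action, which is why thresholds help avoid restart loops on flaky checks.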

C. Accelerated Development and Deployment Cycles (CI/CD)

Kubernetes simplifies the deployment process, allowing for faster and more frequent releases.

- Declarative Deployments: Defining application states in YAML files makes deployments consistent and repeatable.

- Rolling Updates: Kubernetes supports rolling updates that gradually replace old versions of Pods with new ones. Combined with readiness probes, this keeps the application available throughout a deployment.

- Simplified Orchestration: Developers can focus on writing code, knowing that Kubernetes will handle the complexities of scheduling, scaling, and managing their applications in production.

- GitOps Integration: By keeping Kubernetes manifests in Git (infrastructure as code), teams can use familiar developer workflows such as pull requests and reviews to manage infrastructure and deployments, promoting a unified CI/CD pipeline.
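A rolling update is governed by two knobs on a Deployment: maxSurge (how many extra Pods may exist during the rollout) and maxUnavailable (how many may be missing). The simulation below is a rough sketch under a big simplifying assumption, namely that new Pods become ready instantly; the function name and the tuple-based history are made up for illustration.

```python
def rolling_update(replicas, max_surge=1, max_unavailable=0):
    """Return (old_pods, new_pods) snapshots taken after each rollout step."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    history = [(old, new)]
    while old > 0 or new < replicas:
        # Scale down old Pods, keeping at least replicas - max_unavailable running.
        removable = min(old, (old + new) - (replicas - max_unavailable))
        if removable > 0:
            old -= removable
        # Scale up new Pods, never exceeding replicas + max_surge in total.
        addable = min(replicas - new, (replicas + max_surge) - (old + new))
        if addable > 0:
            new += addable  # assumption: new Pods are ready immediately
        history.append((old, new))
    return history
```

With three replicas, maxSurge=1 and maxUnavailable=0, the rollout passes through (3, 1), (2, 2), (1, 3) and ends at (0, 3), so at least three Pods are serving at every step.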

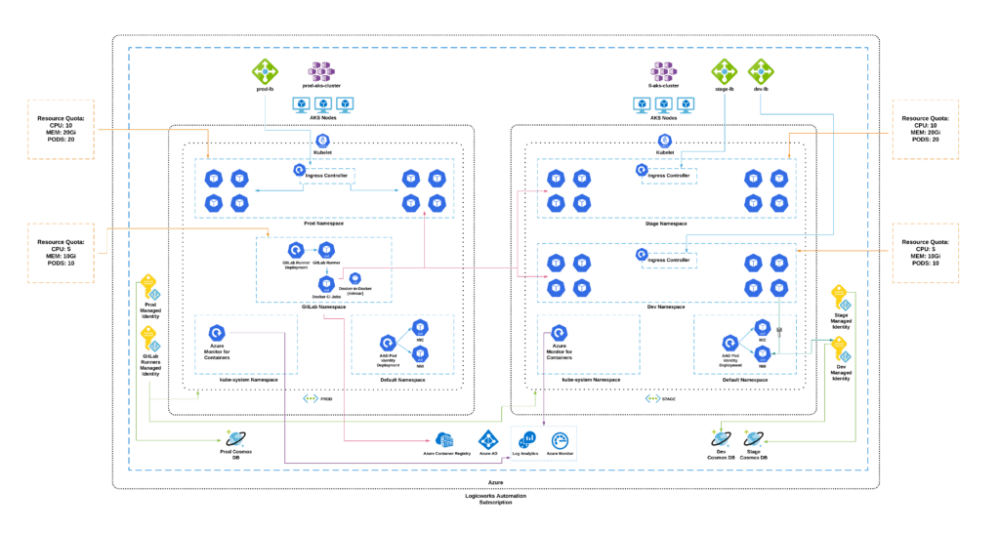

D. Portability Across Environments (Hybrid & Multi-Cloud)

Kubernetes provides a consistent operating environment for containerized applications, regardless of the underlying infrastructure.

- On-Premise to Cloud: Applications running on Kubernetes on-premise can be easily migrated to managed Kubernetes services in public clouds (AWS EKS, Azure AKS, Google GKE).

- Multi-Cloud Strategy: Enterprises can deploy applications across multiple cloud providers, avoiding vendor lock-in and leveraging the unique strengths or cost advantages of different clouds.

- Edge Computing: Kubernetes distributions can run on lightweight hardware at the edge, extending cloud-native practices to IoT and low-latency environments.

- Unified Management Plane: A single set of tools and practices (Kubernetes APIs, kubectl) can be used to manage applications across diverse environments, simplifying operations.

E. Resource Efficiency and Cost Optimization

By optimizing resource utilization and providing granular control, Kubernetes can lead to significant cost savings.

- Bin Packing: Kubernetes efficiently packs Pods onto worker nodes, maximizing the utilization of underlying server resources.

- Right-Sizing: The ability to specify precise CPU and memory requests/limits helps ensure that applications get what they need without over-provisioning, leading to less wasted resources.

- Pay-as-You-Go for Managed Services: With managed Kubernetes services, you typically pay for the worker nodes you provision (plus, on some providers, a control plane fee), so scaling nodes with demand keeps cloud spending aligned with actual usage.

- Reduced Operational Overhead: Automation of many operational tasks (scaling, healing, scheduling) reduces the need for extensive manual intervention, freeing up valuable engineering time.
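Bin packing is easiest to see with a classic heuristic. The sketch below uses first-fit decreasing on CPU requests in millicores. To be clear, this is a textbook algorithm chosen for illustration, not the scheduler’s actual implementation, and it assumes identical nodes and a single resource dimension.

```python
def first_fit_decreasing(pod_cpu_requests, node_capacity):
    """Pack CPU requests (millicores) onto equal-sized nodes, largest first."""
    nodes = []  # each node is the list of requests placed on it
    for request in sorted(pod_cpu_requests, reverse=True):
        if request > node_capacity:
            raise ValueError(f"request {request}m exceeds node capacity")
        for node in nodes:
            if sum(node) + request <= node_capacity:
                node.append(request)
                break
        else:
            nodes.append([request])  # no existing node fits: open a new one
    return nodes


placement = first_fit_decreasing([500, 1500, 700, 300, 1000], node_capacity=2000)
```

Here five Pods totalling 4000m fit onto two 2000m nodes, where a naive one-Pod-per-node layout would have needed five.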

F. Thriving Ecosystem and Community

Kubernetes benefits from a massive, active open-source community and a rich ecosystem of tools and integrations.

- Vast Tooling: A wide array of third-party tools, plugins, and services extend Kubernetes’ capabilities (e.g., service meshes, monitoring, logging, security tools).

- Strong Community Support: Extensive documentation, online forums, and a vibrant community provide ample resources for learning, troubleshooting, and collaboration.

- Industry Standard: Its status as the industry standard provides stability, attracts talent, and ensures long-term support and innovation.

Challenges and Considerations in Kubernetes Adoption

While Kubernetes offers compelling advantages, its adoption is not without its complexities. Organizations must be prepared to navigate these challenges for successful implementation.

A. Steep Learning Curve and Operational Complexity

Kubernetes is a powerful but complex system. Its distributed nature, extensive API, and numerous concepts (Pods, Deployments, Services, Namespaces, Ingress, etc.) present a steep learning curve for new users and teams.

- Abstraction Overheads: Although Kubernetes abstracts the infrastructure, understanding how it manages networking, storage, and scheduling still requires significant effort.

- Debugging Distributed Systems: Debugging issues in a highly distributed Kubernetes environment, where applications might span multiple Pods and nodes, is inherently more complex than debugging a monolithic application.

- Operational Maturity: Operating Kubernetes in production requires mature DevOps practices, robust monitoring, logging, and alerting systems, and specialized SRE (Site Reliability Engineering) skills.

B. Resource Consumption of the Control Plane

While efficient for workloads, the Kubernetes control plane itself requires dedicated compute and memory resources. For very small deployments or edge scenarios, this control plane overhead can be significant relative to the application being run, making it potentially inefficient for minimal workloads.

C. Storage Management Challenges for Stateful Applications

While Kubernetes offers Persistent Volumes (PVs) and Persistent Volume Claims (PVCs), managing persistent storage for stateful applications (e.g., databases) within Kubernetes can be more complex than for stateless ones.

- StatefulSets: While StatefulSets help manage stateful applications, ensuring data integrity, backups, and disaster recovery for distributed databases within a dynamic container environment requires careful planning and specialized solutions (e.g., database Operators).

- Storage Provisioning: Integrating with different types of storage (cloud-provider specific, on-premise SAN/NAS) and ensuring performant, highly available storage can be challenging.

D. Networking Complexity

Kubernetes networking is a sophisticated layer of abstraction that can be challenging to understand and troubleshoot.

- Container Network Interface (CNI): Different CNI plugins offer varying features and performance characteristics, and choosing the right one requires careful consideration.

- Network Policies: Defining granular network policies to control communication between Pods and Services, while crucial for security, can be complex to configure and manage.

- Ingress and Load Balancing: Correctly configuring Ingress controllers, external load balancers, and DNS for applications exposed to the internet requires expertise.

E. Security and Compliance

While Kubernetes offers robust security features, misconfigurations can lead to significant vulnerabilities.

- RBAC Complexity: Role-Based Access Control (RBAC) in Kubernetes is powerful but can be complex to configure correctly, ensuring least privilege access.

- Pod Security: Ensuring Pods run with appropriate security contexts and limits is crucial.

- Vulnerability Management: Scanning container images, managing secrets, and securing the Kubernetes API server itself are continuous security challenges that need dedicated tooling and processes.

- Compliance Auditing: Auditing Kubernetes clusters against regulatory compliance standards requires specialized tools and expertise.

F. Cost Management

While Kubernetes can optimize resource utilization, unchecked scaling or inefficient cluster design can lead to unexpected cloud costs.

- Node Sizing: Choosing the correct size and number of worker nodes to efficiently run Pods without waste.

- Managed Service Costs: While convenient, managed Kubernetes services have associated costs that need to be factored in.

- Egress Traffic: Data transfer costs, especially for inter-region traffic or large egress, can be significant.

- Resource Limits/Requests: Properly setting resource limits and requests for Pods is crucial for efficient scheduling and preventing resource starvation or waste.

Best Practices for Mastering Kubernetes Orchestration

To truly master Kubernetes and unlock its full potential, organizations should adopt a strategic, disciplined, and automated approach, focusing on operational excellence and continuous improvement.

A. Embrace a Cloud-Native Mindset and Culture

Successful Kubernetes adoption requires a shift in thinking across the organization. Foster a cloud-native mindset that embraces immutable infrastructure, microservices, automation, and a DevOps culture where developers and operations teams collaborate closely. Encourage continuous learning and experimentation.

B. Start Small and Iterate Incrementally

Avoid trying to migrate everything to Kubernetes at once. Begin with small, non-critical applications or new greenfield projects. Learn from these initial deployments, gather operational experience, and then gradually expand the scope of Kubernetes adoption. This incremental approach reduces risk and allows teams to build expertise.

C. Automate Everything with GitOps

Leverage the declarative nature of Kubernetes by adopting GitOps. Store all Kubernetes configuration files (YAML manifests) in a Git repository as the single source of truth. Automate deployment with GitOps controllers (e.g., Argo CD, Flux CD) that continuously sync the cluster state with the Git repository, alongside your CI pipelines. This enables consistent, auditable, and repeatable deployments.

D. Implement Robust Observability (Monitoring, Logging, Tracing)

You cannot manage or scale what you cannot see. Implement comprehensive observability for your Kubernetes clusters and applications.

- Monitoring: Collect metrics on cluster health, node utilization, Pod performance, and application-specific KPIs using tools like Prometheus and Grafana.

- Logging: Centralize logs from all Pods and cluster components (e.g., using an ELK stack, Grafana Loki, or cloud-native logging services) for effective troubleshooting and auditing.

- Tracing: Implement distributed tracing (e.g., OpenTelemetry, Jaeger) to visualize request flows across microservices running in Kubernetes, identifying bottlenecks and failures.

E. Design for Resilience and Fault Tolerance

Leverage Kubernetes’ built-in resilience features and augment them with best practices.

- Liveness and Readiness Probes: Configure these probes for all your applications to ensure Kubernetes can automatically manage Pod health.

- Pod Disruption Budgets: Define PDBs to ensure a minimum number of Pods are always running, even during voluntary disruptions (e.g., node maintenance).

- Anti-Affinity Rules: Configure anti-affinity to ensure critical application components are distributed across different nodes, racks, or even availability zones for higher availability.

- Graceful Shutdowns: Design applications to gracefully shut down, allowing Kubernetes to remove them from service and drain connections before termination.
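The Pod Disruption Budget rule above boils down to a simple check before each voluntary eviction. This sketch models only the minAvailable form with absolute numbers; the `can_evict` and `drain` helpers are hypothetical, and percentages and maxUnavailable are left out.

```python
def can_evict(healthy_pods: int, min_available: int) -> bool:
    """Allow a voluntary eviction only if enough Pods stay healthy afterwards."""
    return healthy_pods - 1 >= min_available


def drain(pods_on_node: int, healthy_total: int, min_available: int) -> int:
    """Return how many Pods can be evicted from a node without breaking the budget."""
    evicted = 0
    while evicted < pods_on_node and can_evict(healthy_total - evicted, min_available):
        evicted += 1
    return evicted
```

With three healthy replicas and a budget of two, draining a node that hosts two of them evicts only one; the second has to wait until a replacement is ready elsewhere.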

F. Master Kubernetes Networking and Ingress

Understand and strategically design your Kubernetes networking layer.

- Choose the Right CNI Plugin: Select a CNI plugin that meets your networking, security, and performance requirements (e.g., Calico for network policy, Cilium for eBPF-based networking).

- Implement Network Policies: Use Kubernetes Network Policies to define granular firewall rules at the Pod level, isolating application components and enhancing security.

- Leverage Ingress Controllers and Service Meshes: Use Ingress controllers (e.g., NGINX Ingress, Traefik) for external access and consider a service mesh (e.g., Istio, Linkerd) for advanced traffic management, security, and observability for microservices.
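Network Policies follow two rules worth internalizing: a Pod that no policy selects accepts all traffic, and once a policy selects it, only explicitly allowed sources get through. The sketch below models ingress only, with label matching in a single namespace; the `pod_selector` and `allow_from` field names are simplified stand-ins for the real manifest fields, and ports, namespaces, and egress are ignored.

```python
def selects(selector: dict, labels: dict) -> bool:
    """True when every key/value pair in the selector appears in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def ingress_allowed(source_labels: dict, target_labels: dict, policies: list) -> bool:
    """Decide whether traffic from the source Pod may reach the target Pod."""
    applicable = [p for p in policies if selects(p["pod_selector"], target_labels)]
    if not applicable:
        return True  # no policy selects the target, so it is not isolated
    # Policies are additive: one matching allow rule is enough.
    return any(
        selects(allowed, source_labels)
        for policy in applicable
        for allowed in policy["allow_from"]
    )


policies = [{"pod_selector": {"app": "db"}, "allow_from": [{"app": "api"}]}]
```

In this setup the `api` Pods can reach the database, the `web` Pods cannot, and traffic to `api` itself is unrestricted because no policy selects it.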

G. Secure Your Cluster and Applications (DevSecOps)

Security must be an integral part of your Kubernetes strategy.

- RBAC and Least Privilege: Implement strict Role-Based Access Control (RBAC) to ensure users and applications only have the minimum necessary permissions.

- Container Image Security: Regularly scan container images for known vulnerabilities. Use trusted base images and implement supply chain security best practices.

- Network Segmentation: Use Network Policies to create strong segmentation between different workloads.

- Secret Management: Use Kubernetes Secrets in conjunction with external secret management solutions (e.g., HashiCorp Vault) to protect sensitive data.

- Audit Logging: Enable and review audit logs from the Kubernetes API server for suspicious activities.
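Least privilege is easier to reason about once you remember that RBAC is purely additive: there are no deny rules, so a request is rejected unless some bound role grants it. The sketch below captures that logic; the `roles` and `role_bindings` structures and the subject names are invented for illustration and omit API groups, namespaces, and resource names.

```python
def is_allowed(subject, verb, resource, role_bindings, roles):
    """Return True if any role bound to the subject grants the verb on the resource."""
    for binding in role_bindings:
        if subject not in binding["subjects"]:
            continue
        for rule in roles[binding["role"]]:
            verb_ok = verb in rule["verbs"] or "*" in rule["verbs"]
            resource_ok = resource in rule["resources"] or "*" in rule["resources"]
            if verb_ok and resource_ok:
                return True
    return False  # nothing granted it, so it is denied by default


roles = {
    "pod-reader": [{"verbs": ["get", "list", "watch"], "resources": ["pods"]}],
    "deployer": [{"verbs": ["*"], "resources": ["deployments"]}],
}
role_bindings = [
    {"role": "pod-reader", "subjects": ["alice", "ci-bot"]},
    {"role": "deployer", "subjects": ["ci-bot"]},
]
```

Here `alice` can list Pods but cannot delete them or touch Deployments, while `ci-bot` picks up permissions from both bindings.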

H. Optimize Resource Allocation and Costs

Efficiently manage your cluster resources to optimize performance and control costs.

- Resource Requests and Limits: Accurately define CPU and memory requests and limits for all your containers. This helps Kubernetes schedule Pods effectively and prevents resource starvation or waste.

- Cluster Autoscaling: Implement cluster autoscaling to automatically adjust the number of worker nodes based on Pod demand, optimizing cloud infrastructure costs.

- Spot/Preemptible Instances: For fault-tolerant, stateless workloads, consider using cheaper spot or preemptible instances in conjunction with robust anti-affinity rules.

- FinOps Practices: Integrate financial operations (FinOps) principles, continuously monitoring and optimizing cloud spending related to your Kubernetes clusters.

I. Plan for Persistent Storage and Disaster Recovery

For stateful applications, meticulous planning for storage and disaster recovery is essential.

- Persistent Storage Solutions: Choose appropriate Persistent Volume (PV) solutions (e.g., cloud-provider specific block storage, shared file systems, distributed storage solutions like Ceph) that meet your application’s performance and availability needs.

- Database Operators: For running databases in Kubernetes, leverage database-specific Operators that automate complex operational tasks like backup, restore, and upgrades.

- Backup and Restore Strategy: Implement robust backup and restore procedures for both application data and Kubernetes cluster state (etcd).

- Multi-Cluster/Multi-Region Deployments: For critical applications, plan for multi-cluster or multi-region deployments to ensure high availability and disaster recovery.

The Future Trajectory of Kubernetes Orchestration

Kubernetes is not a static technology; it’s a rapidly evolving ecosystem that continues to push the boundaries of cloud-native computing. Several exciting trends are poised to define its future.

A. Ubiquitous Deployment and Abstraction

Kubernetes will become an even more ubiquitous abstraction layer, running across all environments.

- Edge Kubernetes: Lightweight Kubernetes distributions will become standard for managing containerized workloads at the network edge (IoT devices, factory floors, retail locations), extending cloud-native practices closer to data sources.

- Serverless Kubernetes (K8s as a Service): Managed services will further abstract away the underlying Kubernetes control plane and nodes, making it even easier to consume Kubernetes resources without managing the cluster itself (e.g., Amazon EKS on AWS Fargate, GKE Autopilot, or Azure Container Apps, which is built on Kubernetes).

- Mainframe/Legacy Modernization: Kubernetes may play an increasing role in modernizing monolithic applications running on traditional mainframes or legacy infrastructure, acting as a consistent deployment target.

B. Enhanced AI and Machine Learning Workloads

Kubernetes is increasingly becoming the platform of choice for AI/ML workloads.

- GPU/Accelerator Management: Improved native support and scheduling for GPUs and other AI accelerators, making it easier to deploy and scale machine learning training and inference jobs.

- MLOps on Kubernetes: The ecosystem for MLOps (Machine Learning Operations) on Kubernetes (e.g., Kubeflow, MLflow) will mature, providing robust tools for managing the entire ML lifecycle, from data prep to model deployment and monitoring.

- AI for Kubernetes Management: AI and machine learning will be used to optimize Kubernetes itself: predicting resource needs, optimizing scheduling, and even automating troubleshooting and self-healing at a higher level.

C. Deeper Integration with Service Mesh

Service meshes (e.g., Istio, Linkerd, Cilium) will become an even more integral part of Kubernetes deployments, particularly for microservices.

- Automated Traffic Management: Advanced routing, load balancing, canary deployments, and A/B testing will become standard, simplifying complex deployment strategies.

- Zero-Trust Security: Service meshes will provide a powerful layer for implementing fine-grained network policies, mutual TLS (mTLS), and identity-based access control between services, enforcing a zero-trust security model.

- Enhanced Observability: Built-in tracing, metrics, and logging within the mesh will provide unparalleled visibility into inter-service communication, simplifying debugging and performance analysis in distributed applications.

D. Multi-Cluster and Federation Management

As organizations deploy Kubernetes across multiple clusters (e.g., for multi-cloud, hybrid cloud, or disaster recovery), the need for effective multi-cluster management will intensify.

- Federation APIs: Native Kubernetes federation APIs or third-party tools will mature, allowing for centralized management, policy enforcement, and workload distribution across multiple clusters.

- Cross-Cluster Networking: Solutions for seamless, secure networking between geographically dispersed Kubernetes clusters will become more robust.

- Global Load Balancing: Advanced global load balancing and traffic management across multiple clusters for ultimate resilience and performance.

E. Enhanced Security and Policy Enforcement

Security will continue to be a paramount focus, with more native and integrated solutions.

- Native Supply Chain Security: Kubernetes will incorporate more native features for software supply chain security, including automated SBOM (Software Bill of Materials) generation and integrity checks for container images and deployments.

- Advanced Policy Engines: Policy as Code engines (e.g., OPA Gatekeeper) will become more powerful and widely adopted, enforcing granular security and compliance policies across the entire cluster lifecycle.

- Confidential Computing: Integration with confidential computing technologies will enable workloads to run in hardware-isolated, encrypted environments, providing enhanced security for sensitive data and applications in public cloud Kubernetes clusters.

Conclusion

Kubernetes has firmly established itself as the orchestrator of choice for the cloud-native era. It has moved beyond merely managing containers to providing a comprehensive platform that automates deployment, scaling, and management of complex application landscapes. By embracing declarative configuration, self-healing capabilities, and a thriving ecosystem, Kubernetes empowers organizations to build and deliver applications with unprecedented speed, resilience, and efficiency.

While its initial learning curve and inherent complexity demand significant investment in skills and operational maturity, the benefits of standardizing on Kubernetes are profound. It enables application portability across diverse infrastructure, optimizes resource utilization, and changes how development and operations teams collaborate. The future trajectory of Kubernetes points towards even greater abstraction, deeper integration with AI/ML, enhanced security, and seamless management of multi-cluster environments. It is not just a technology; it is a foundational shift in how we build and run modern software. Enterprises that use it well can orchestrate nearly everything they run on a single, consistent platform.