Modern enterprises face an unprecedented volume of information. This era of hyperscale data, characterized by volumes, velocities, and varieties that defy traditional processing methods, is both a challenge and an immense opportunity. Artificial Intelligence (AI) is central to taming this digital torrent and extracting its value. By applying machine learning models and other sophisticated algorithms, AI is transforming how organizations collect, store, process, analyze, and ultimately derive intelligence from massive datasets. This is more than an incremental improvement: the relationship is symbiotic. AI powers the infrastructure and analytical capabilities needed to manage hyperscale data, and that data in turn fuels AI, unlocking business advantage, strategic foresight, and operational efficiency.

The Data Deluge: Understanding Hyperscale Challenges

To grasp the role AI plays in managing hyperscale data, it helps to first understand the magnitude and inherent complexity of the modern data landscape, which traditional approaches were never designed to handle.

A. The Unprecedented Scale of Modern Data

The term “hyperscale” is not merely hyperbole; it refers to data volumes that transcend terabytes and petabytes, rapidly moving into exabytes and beyond. This explosion is driven by various sources:

- Ubiquitous Sensors and IoT: Billions of connected devices, from industrial sensors and smart city infrastructure to wearable tech and autonomous vehicles, continuously generate vast streams of real-time data, often at high velocities. This includes telemetry, environmental readings, positional data, and operational parameters.

- Digital Interactions and E-commerce: Every online click, transaction, search query, social media post, and streaming session contributes to an ever-growing repository of user behavior data. E-commerce platforms, social networks, and content providers manage immense interaction logs.

- Scientific Research and Healthcare: Fields like genomics, astrophysics, climate modeling, and medical imaging produce petabytes of complex, high-dimensional data that require specialized handling and analysis. Electronic health records and medical IoT devices also contribute significantly.

- Enterprise Systems: Modern businesses operate complex systems (ERP, CRM, SCM) that generate enormous transactional and operational data across all functions, from manufacturing to customer service.

- Multimedia Content: The proliferation of high-definition video, high-resolution images, and interactive 3D content significantly adds to data volumes, often requiring specialized storage and processing for rich media analytics.

B. The Challenges of Hyperscale Data Management

Beyond sheer volume, hyperscale data presents intricate challenges in its velocity, variety, and veracity, making traditional data management approaches obsolete.

- Velocity: Real-time Demands: Data isn’t just large; it’s generated and needs to be processed at extreme speeds. Real-time analytics, fraud detection, autonomous systems, and dynamic pricing require immediate insights, rendering batch processing inadequate.

- Variety: Diverse Formats and Sources: Hyperscale data comes in a myriad of formats – structured (databases), semi-structured (JSON, XML), and unstructured (text, images, video, audio). Integrating, cleaning, and transforming this diverse data for analysis is a monumental task.

- Veracity: Trust and Quality: The sheer volume and disparate sources make it difficult to ascertain data quality, accuracy, and trustworthiness. Inaccurate data leads to flawed insights and poor decision-making, emphasizing the need for robust data governance.

- Storage and Infrastructure Costs: Storing exabytes of data requires massive, scalable infrastructure. Traditional storage solutions become prohibitively expensive, leading to a reliance on cloud-native object storage and specialized data lakes.

- Processing Power and Latency: Analyzing vast datasets, especially for complex analytical models or AI training, demands immense computational power. General-purpose CPUs alone often struggle with these highly parallel workloads, leading to bottlenecks and high latency.

- Security and Compliance: Managing and securing hyperscale data across distributed environments introduces complex challenges related to data privacy (e.g., GDPR, CCPA), access control, encryption, and regulatory compliance across multiple jurisdictions.

- Talent Gap: The specialized skills required to manage, process, and extract value from hyperscale data (data engineers, MLOps specialists, cloud architects) are in high demand and short supply.

These interwoven challenges highlight the indispensable role of AI as a transformative force, enabling organizations to not just cope with, but thrive amidst, the data deluge.

AI as the Enabler: Powering Hyperscale Data Capabilities

Artificial Intelligence, particularly its subfields of Machine Learning (ML) and Deep Learning (DL), provides the critical capabilities needed to harness the potential of hyperscale data. AI acts as an intelligent layer that automates, optimizes, and extracts unprecedented insights.

A. Automated Data Ingestion and Preparation

AI significantly streamlines the initial, often labor-intensive, phases of data handling.

- Smart Data Ingestion: AI can intelligently identify data sources, understand data formats (even unstructured text or images), and automate the process of bringing data into data lakes or analytical platforms, reducing manual effort.

- Automated Data Cleaning and Curation: ML algorithms can detect anomalies, identify missing values, correct errors, and de-duplicate records at scale. This automated data cleaning ensures higher data quality and veracity without extensive manual intervention.

- Feature Engineering: AI can assist in or automate the process of feature engineering, transforming raw data into features that are more useful for machine learning models. This is crucial for extracting meaningful patterns from complex datasets.

- Schema Inference: For semi-structured or unstructured data, AI can infer schemas or suggest logical structures, making diverse data sources more amenable to systematic analysis.
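
As a rough illustration of the cleaning steps listed above, the following Python sketch de-duplicates records, fills missing values, and flags outliers. It is a simplified stand-in under stated assumptions: it uses plain statistics (median imputation and a median-absolute-deviation score) rather than a trained ML model, and the function names and the `temp` field are hypothetical.

```python
from statistics import median


def deduplicate(records, key_fields):
    """Keep the first record seen for each combination of key fields."""
    seen = set()
    unique = []
    for rec in records:
        key = tuple(rec.get(f) for f in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


def impute_median(records, field):
    """Return copies of the records with missing (None) values of `field`
    replaced by the median of the values that are present."""
    present = [r[field] for r in records if r.get(field) is not None]
    if not present:
        return [dict(r) for r in records]
    fill = median(present)
    return [{**r, field: fill if r.get(field) is None else r[field]}
            for r in records]


def flag_outliers(values, threshold=3.5):
    """Return indices whose modified z-score exceeds `threshold`.

    The score is 0.6745 * |x - median| / MAD; 3.5 is a commonly used cutoff.
    """
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return []  # all values (nearly) identical: nothing to flag
    return [i for i, v in enumerate(values)
            if 0.6745 * abs(v - med) / mad > threshold]
```

At hyperscale the same logic would typically run inside a distributed engine rather than over in-memory lists, and a learned model could replace the fixed threshold.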

B. Intelligent Data Storage and Management

AI optimizes how hyperscale data is stored, categorized, and accessed, leading to cost savings and improved performance.

- Automated Data Tiering: AI can analyze data access patterns and automatically move data to the most cost-effective storage tier (e.g., from hot, high-performance storage to cold, archival storage) based on its usage frequency, optimizing storage costs.

- Data Deduplication and Compression: AI algorithms can identify redundant data blocks and apply advanced compression techniques, minimizing storage footprint and reducing associated costs.

- Smart Indexing and Search: AI-powered indexing and search capabilities allow users to quickly find relevant data within massive data lakes, even when the data variety is high, by understanding context and relationships.

- Data Governance and Compliance Automation: AI can monitor data access patterns, detect potential policy violations (e.g., unauthorized access to sensitive data), and automate compliance checks, ensuring adherence to data privacy regulations at scale.
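
The tiering idea above can be sketched as a small decision function. This is a minimal, rule-based illustration, not a learned policy: the `ObjectStats` fields, the tier names, and the thresholds are all assumptions chosen for the example, and an AI-driven system would be expected to learn such thresholds from observed access patterns.

```python
from dataclasses import dataclass


@dataclass
class ObjectStats:
    """Hypothetical access statistics for one stored object."""
    key: str
    days_since_last_access: int
    reads_last_30_days: int


def choose_tier(stats, hot_reads=10, cold_after_days=90):
    """Pick a storage tier from simple access statistics.

    Thresholds are illustrative defaults, not recommendations.
    """
    if stats.reads_last_30_days >= hot_reads:
        return "hot"    # frequently read: keep on fast storage
    if stats.days_since_last_access >= cold_after_days:
        return "cold"   # untouched for a long time: archive
    return "warm"       # everything in between


def plan_tiers(all_stats):
    """Map each object key to its proposed tier."""
    return {s.key: choose_tier(s) for s in all_stats}
```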

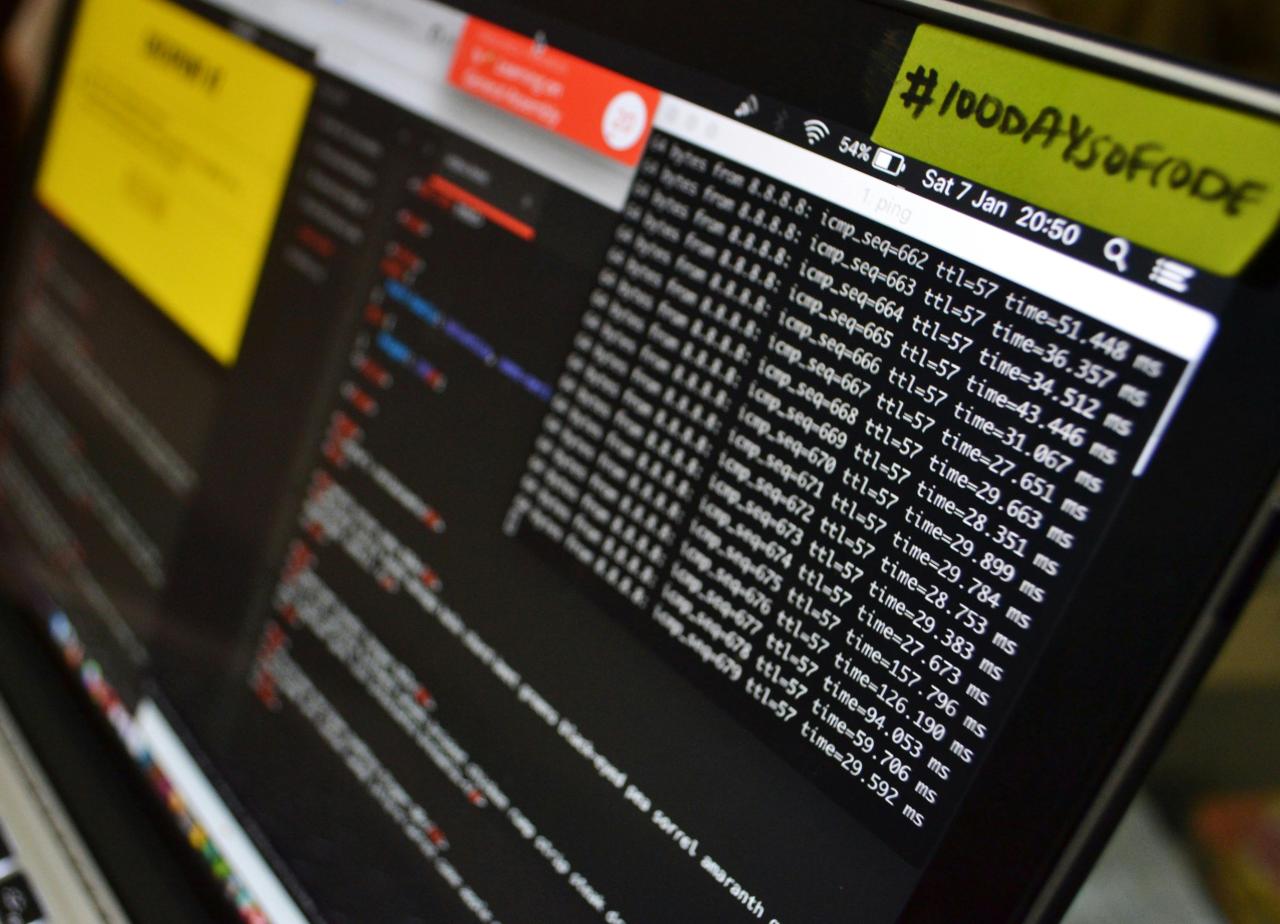

C. Accelerated Data Processing and Analytics

AI provides the algorithmic intelligence, backed by scalable compute, to process and analyze hyperscale data with a speed and depth that manual analysis cannot match.

- Optimized Query Execution: AI can learn from past query performance to optimize future query plans, dynamically adjusting resource allocation and execution strategies to achieve faster results on massive datasets.

- Real-time Stream Processing: ML models can be embedded directly into streaming data pipelines to perform real-time analytics, anomaly detection, and predictive modeling on data as it arrives (e.g., fraud detection, IoT analytics, network intrusion detection).

- Pattern Recognition and Anomaly Detection: AI excels at identifying subtle patterns, correlations, and anomalies within vast datasets that would be impractical for humans to find manually, revealing hidden insights (e.g., identifying unusual transactions, predicting equipment failures).

- Predictive and Prescriptive Analytics: Beyond understanding what happened (descriptive analytics) or what might happen (predictive analytics), AI-powered systems can recommend optimal actions (prescriptive analytics) based on complex data analysis, guiding business decisions.

- Automated Reporting and Visualization: AI can automate the generation of complex reports and interactive visualizations from large datasets, presenting insights in an easily digestible format for business users.
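
To illustrate how a detector might be embedded in a streaming pipeline, here is a minimal sketch of rolling-window anomaly detection. It assumes a single numeric stream and uses a z-score over recent values instead of a trained model; the class name, window size, and threshold are hypothetical choices for the example.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flag values that deviate strongly from a sliding window of recent data."""

    def __init__(self, window=50, threshold=3.0, min_samples=10):
        self.values = deque(maxlen=window)  # oldest values drop off automatically
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, x):
        """Return True if `x` looks anomalous relative to the current window."""
        is_anomaly = False
        if len(self.values) >= self.min_samples:
            mu = mean(self.values)
            sigma = pstdev(self.values)
            if sigma > 0 and abs(x - mu) / sigma > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            # Only normal values update the baseline, so a burst of
            # outliers does not immediately shift what counts as normal.
            self.values.append(x)
        return is_anomaly
```

In a real deployment the `observe` call would sit inside a stream consumer loop, and the z-score rule could be swapped for a model scored on each event as it arrives.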

D. Fueling Advanced AI/ML Model Training

Hyperscale data is not just managed by AI; it also feeds and trains the most advanced AI/ML models.

- Large Language Models (LLMs): The massive datasets of text and code (trillions of tokens) used to train LLMs require hyperscale data infrastructure. AI itself is then used to manage and curate these colossal training datasets.

- Computer Vision and Speech Recognition: Training sophisticated computer vision models (e.g., for autonomous vehicles, medical imaging) and speech recognition systems requires petabytes of annotated image, video, and audio data. AI-powered tools assist in the annotation and management of these datasets.

- Reinforcement Learning: Training reinforcement learning agents often involves massive simulations and data collection from numerous interactions, all of which benefit from hyperscale data processing capabilities.

- MLOps and Model Lifecycle Management: AI is used within MLOps platforms to automate the deployment, monitoring, retraining, and governance of ML models, ensuring they remain effective and accurate on ever-evolving hyperscale data.

Strategic Advantages of AI-Powered Hyperscale Data

The synergistic relationship between AI and hyperscale data generates profound strategic advantages for organizations, enabling them to navigate complex markets, innovate rapidly, and sustain competitive edge.

A. Unlocking Unprecedented Business Insights

The most significant advantage is the ability to extract deeper, more actionable intelligence from data that was previously too vast or complex to process.

- 360-Degree Customer View: Integrating data from all customer touchpoints (sales, marketing, service, social media) allows AI to create a comprehensive understanding of customer behavior, preferences, and needs, enabling hyper-personalized experiences and targeted marketing.

- Predictive Foresight: AI can forecast market trends, consumer demand, equipment failures, financial risks, and supply chain disruptions, with accuracy that depends on data quality and on how stable the underlying patterns are, allowing businesses to make proactive, data-driven decisions.

- Discovery of Hidden Patterns: AI algorithms can uncover subtle correlations, anomalies, and insights within massive, complex datasets that would be impractical for human analysts to find, revealing new opportunities or potential threats.

- Accelerated R&D and Innovation: In scientific and engineering domains, AI analyzing hyperscale experimental data or simulation results can accelerate material discovery, drug development, and product innovation by rapidly testing hypotheses and optimizing designs.

B. Enhanced Operational Efficiency and Cost Reduction

AI applied to hyperscale data streamlines operations, minimizes waste, and leads to significant cost savings.

- Automated Decision-Making: AI can automate routine operational decisions (e.g., dynamic pricing, inventory reordering, routing logistics), reducing manual effort and speeding up processes.

- Predictive Maintenance: By analyzing sensor data from industrial equipment, AI predicts potential failures, enabling just-in-time maintenance that reduces unplanned downtime, extends asset lifespan, and lowers maintenance costs.

- Resource Optimization: AI can optimize energy consumption in data centers, manage cloud resource allocation dynamically, and fine-tune operational parameters in manufacturing, leading to significant reductions in operational costs and environmental footprint.

- Fraud Detection and Risk Management: AI analyzes vast transactional data in real-time to detect fraudulent activities, identify credit risks, and flag suspicious patterns, protecting financial assets and minimizing losses.

C. Superior Customer Experiences and Personalization

Leveraging AI to understand hyperscale customer data leads directly to more engaging and satisfying customer interactions.

- Hyper-Personalized Products/Services: AI can recommend products, content, or services tailored to individual customer preferences, purchase history, and real-time behavior, leading to higher conversion rates and customer satisfaction.

- Proactive Customer Service: AI can anticipate customer needs or issues before they arise (e.g., predicting a service outage, a product expiring) and proactively offer solutions or support, improving customer loyalty.

- Intelligent Chatbots and Virtual Assistants: AI-powered chatbots can handle a high volume of customer inquiries, providing instant, personalized responses and escalating complex issues to human agents, improving service efficiency and availability.

D. Increased Resilience and Adaptability

AI-driven insights from hyperscale data enable organizations to be more agile and resilient in the face of change.

- Supply Chain Optimization and Resilience: AI analyzes global supply chain data (weather, geopolitical events, demand fluctuations) to predict disruptions, optimize logistics, and suggest alternative sourcing strategies, building more resilient supply chains.

- Real-time Anomaly Detection: AI continuously monitors vast data streams to detect unusual patterns or anomalies that could indicate security breaches, operational failures, or market shifts, enabling rapid response and mitigation.

- Dynamic Market Response: By continuously analyzing market data, social media sentiment, and competitor activity, AI empowers businesses to quickly adapt their strategies, product offerings, and marketing campaigns to changing market conditions.

E. Competitive Differentiation

Organizations that master AI-powered hyperscale data gain a significant competitive edge.

- First-Mover Advantage: The ability to rapidly extract insights from data allows companies to identify and capitalize on new market opportunities faster than competitors.

- Data-Driven Innovation: AI becomes an engine for continuous innovation, enabling the development of new data-centric products, services, and business models.

- Efficiency Leadership: Companies that effectively leverage AI for operational optimization gain a cost advantage and can deliver products or services more efficiently, outcompeting rivals.

Critical Challenges in Implementing AI-Powered Hyperscale Data

While the benefits are immense, harnessing AI for hyperscale data presents complex challenges that require strategic planning, significant investment, and overcoming fundamental hurdles.

A. Data Quality, Governance, and Trust

The sheer volume and variety of hyperscale data exacerbate existing data challenges.

- Data Quality Issues: Inaccurate, inconsistent, incomplete, or biased data will lead to flawed AI models and erroneous insights. Cleaning and validating petabytes of data is a monumental task.

- Data Governance Complexity: Establishing comprehensive data governance frameworks for hyperscale data (data ownership, lineage, quality, access policies) across diverse sources is incredibly complex, especially in hybrid or multi-cloud environments.

- Ethical AI and Bias: AI models trained on biased hyperscale datasets can perpetuate and even amplify societal biases, leading to unfair or discriminatory outcomes. Ensuring ethical AI development and mitigating bias in data and algorithms is a critical challenge.

- Data Privacy and Compliance: Managing sensitive personal data at hyperscale while complying with evolving global privacy regulations (e.g., GDPR, CCPA, Indonesia’s PDP Law) requires robust data anonymization, pseudonymization, and access control mechanisms, which are difficult to implement and enforce at scale.
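
As a small illustration of the pseudonymization mentioned above, the sketch below replaces an identifier with a keyed hash using Python's standard `hmac` module. The function name and the example key are hypothetical, and key management (storage, rotation, access) is assumed to be handled elsewhere.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Return a keyed SHA-256 digest (hex) that stands in for `value`.

    The same value and key always produce the same token, so records can
    still be joined, but the original value cannot be read from the token.
    """
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


if __name__ == "__main__":
    key = b"example-key-not-for-production"  # placeholder key for the demo
    print(pseudonymize("alice@example.com", key))
```

A keyed hash is used instead of a plain hash so that someone without the key cannot rebuild the mapping by hashing guessed identifiers. Pseudonymized data is generally still treated as personal data under regulations such as GDPR, so this complements access controls and does not replace them.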

B. Infrastructure and Computational Demands

Processing and training AI models on hyperscale data requires immense and specialized infrastructure.

- Compute Power and Energy Consumption: Training large AI models (e.g., LLMs) on corpora of trillions of tokens requires colossal computational resources (GPUs, TPUs) and consumes vast amounts of energy, raising both cost and environmental concerns.

- Storage and Data Movement: Storing and efficiently moving petabytes of data between storage, compute, and memory components is a significant bottleneck. This necessitates high-bandwidth networks, specialized storage solutions (data lakes, lakehouses), and optimized data pipelines.

- Scalability of AI Infrastructure: Building and managing AI infrastructure that can scale dynamically to handle fluctuating hyperscale data workloads (from real-time streams to batch training) requires advanced cloud architecture and MLOps expertise.

C. Talent Shortage and Skill Gap

The specialized expertise required to implement and manage AI-powered hyperscale data solutions is scarce.

- Interdisciplinary Skills: Success requires a blend of data engineering, data science, machine learning engineering, MLOps, cloud architecture, and domain expertise. Finding individuals or teams with this interdisciplinary skillset is challenging.

- Continuous Learning: The pace of innovation in AI, cloud, and data technologies is incredibly rapid, requiring continuous upskilling and learning for technical teams.

- Organizational Structure and Culture: Traditional organizational silos between IT, data science, and business units can hinder effective collaboration and the successful implementation of AI-powered data initiatives.

D. Model Complexity, Interpretability, and MLOps

Managing AI models at scale introduces its own set of complexities.

- Model Explainability (XAI): As AI models become more complex (e.g., deep neural networks), understanding why they make certain predictions (interpretability) becomes challenging. This ‘black box’ problem is critical for trust, debugging, and regulatory compliance.

- Model Drift and Maintenance: AI models trained on historical data can degrade in performance over time if the underlying data patterns change (model drift). Continuously monitoring, retraining, and updating models (MLOps) at hyperscale is a complex operational challenge.

- Bias and Fairness: Detecting, quantifying, and mitigating bias in AI models, especially when dealing with sensitive hyperscale data, requires dedicated tools and ethical considerations.

- Model Versioning and Governance: Managing multiple versions of AI models, their associated data, and ensuring proper governance throughout their lifecycle is a significant MLOps challenge.
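
One common way to quantify the drift described above is the Population Stability Index (PSI), which compares the distribution of a feature at training time with its distribution in production. The sketch below is a minimal version under stated assumptions: equal-width bins taken from the baseline range, a small floor to avoid division by zero, and a hypothetical function name.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline sample (`expected`) and a newer sample (`actual`).

    Bins are equal-width over the baseline range; values outside that range
    are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(sample):
        counts = [0] * bins
        for x in sample:
            idx = int((x - lo) / width)
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        # Floor each share at eps so the logarithm below is always defined.
        return [max(c / len(sample), eps) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A frequently cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift worth investigating. These cutoffs are conventions rather than guarantees and should be tuned per feature.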

E. Integration with Legacy Systems

Many large enterprises operate with legacy systems that are not designed for hyperscale data or AI integration. Integrating modern AI/data platforms with these older systems can be a complex, costly, and time-consuming process.

Best Practices for Implementing AI-Powered Hyperscale Data Solutions

To effectively harness the power of AI for hyperscale data and mitigate inherent challenges, organizations must adopt a strategic, data-centric, and iterative approach, integrating best practices across their entire data and AI lifecycle.

A. Establish a Robust Data Strategy and Governance Framework

Before diving into AI, lay the groundwork with a solid data strategy and governance framework.

- Define Data Ownership: Clearly delineate ownership and responsibility for different data domains.

- Data Quality Standards: Implement rigorous data quality checks, validation rules, and remediation processes from ingestion to consumption.

- Metadata Management: Establish comprehensive metadata management to catalog, discover, and understand hyperscale data assets across the organization.

- Security and Privacy Policies: Design and enforce granular access controls, encryption, anonymization, and compliance policies (e.g., adhering to GDPR, CCPA) for all sensitive data. This provides the trusted foundation for AI.
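
The data quality standards above can be expressed as executable validation rules. The following sketch is one simple way to do that, assuming records are dictionaries; the rule names and the `customer_id`, `amount`, and `currency` fields are hypothetical examples, not a prescribed schema.

```python
def validate(record, rules):
    """Return the names of all rules that `record` violates."""
    return [name for name, check in rules.items() if not check(record)]


# Each rule maps a human-readable name to a predicate over one record.
RULES = {
    "customer_id present": lambda r: bool(r.get("customer_id")),
    "amount non-negative": lambda r: (
        isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0
    ),
    "currency is 3 uppercase letters": lambda r: (
        isinstance(r.get("currency"), str)
        and len(r["currency"]) == 3
        and r["currency"].isalpha()
        and r["currency"].isupper()
    ),
}


def split_valid_invalid(records, rules=RULES):
    """Separate clean records from those that need remediation."""
    valid, invalid = [], []
    for rec in records:
        violations = validate(rec, rules)
        if violations:
            invalid.append((rec, violations))
        else:
            valid.append(rec)
    return valid, invalid
```

Keeping rules as named predicates makes violations easy to report per rule, which helps when routing bad records to a remediation process instead of silently dropping them.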

B. Build a Scalable Cloud-Native Data Platform

Leverage the elasticity and managed services of cloud providers to build a data platform capable of handling hyperscale volumes and velocities.

- Data Lake / Lakehouse Architecture: Adopt a data lake (which stores raw data in any format, structured or unstructured) or a data lakehouse (combining data lake flexibility with data warehouse structure) as the central repository for all your hyperscale data.

- Streaming Data Pipelines: Implement real-time data ingestion and processing capabilities for high-velocity data using platforms such as Apache Kafka, Amazon Kinesis, or Google Cloud Pub/Sub.

- Elastic Compute and Storage: Utilize cloud-native compute (e.g., serverless functions, managed Kubernetes) and object storage (e.g., Amazon S3, Azure Blob Storage, Google Cloud Storage) that scale with demand, so you pay for capacity only when it is needed.

- Managed Database Services: Employ managed relational and NoSQL databases optimized for scale and specific data models, reducing operational overhead.

C. Prioritize MLOps for AI Lifecycle Management

Treat AI models like first-class citizens in your software development lifecycle by adopting MLOps (Machine Learning Operations) practices.

- Automated Model Training and Deployment: Automate the entire process from data preparation and model training to deployment, versioning, and endpoint management.

- Continuous Monitoring: Implement robust monitoring for model performance, data drift, concept drift, and bias in production, triggering alerts for necessary retraining or intervention.

- Model Governance and Explainability: Maintain clear lineage for models and their training data. Invest in tools and techniques for model interpretability (XAI) to understand predictions and build trust.

- Reproducible AI Pipelines: Ensure that AI models can be consistently reproduced, from data lineage to code versions, for auditing and debugging.
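
One lightweight way to support the reproducibility and lineage goals above is to derive a fingerprint for every training run from its inputs. The sketch below assumes three inputs (a hash of the training data, a code version string such as a commit ID, and the hyperparameters); the function names and the record layout are illustrative, not the format of any particular MLOps tool.

```python
import hashlib
import json


def hash_bytes(data: bytes) -> str:
    """SHA-256 of raw bytes, e.g. the contents of a training data file."""
    return hashlib.sha256(data).hexdigest()


def run_fingerprint(data_hash: str, code_version: str, params: dict) -> str:
    """Deterministic ID for a training run.

    Keys are sorted before hashing, so the order in which hyperparameters
    are specified does not change the fingerprint.
    """
    payload = json.dumps(
        {"data": data_hash, "code": code_version, "params": params},
        sort_keys=True,
        separators=(",", ":"),
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()
```

Storing this fingerprint alongside each deployed model lets an auditor confirm which data, code, and settings produced it, and two runs with the same fingerprint should be comparable.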

D. Invest in Specialized Hardware and Compute Optimization

For compute-intensive AI workloads on hyperscale data, strategic hardware investment and optimization are crucial.

- GPU/TPU Utilization: Leverage Graphics Processing Units (GPUs) or Tensor Processing Units (TPUs) in the cloud or on-premises for accelerating deep learning training and inference.

- Distributed Training Frameworks: Utilize frameworks (e.g., TensorFlow, PyTorch, Horovod) that support distributed training across multiple GPUs or machines to handle massive datasets.

- Edge AI Deployments: For low-latency, real-time inferencing, deploy optimized AI models to edge devices, minimizing data movement and central compute requirements.

- Hardware-Software Co-optimization: Design and optimize AI models with the underlying hardware architecture in mind to maximize performance and efficiency.
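
To make the data-parallel idea behind distributed training more concrete, the toy sketch below splits a dataset into shards, computes a gradient per "worker", and averages the results before each update. It is only a conceptual illustration: the workers are simulated sequentially in one process, the model is a one-variable linear regression, and none of this reflects how TensorFlow, PyTorch, or Horovod are actually invoked. All function names are hypothetical.

```python
def gradient(w, b, shard):
    """Mean-squared-error gradient for y ~ w*x + b over one shard."""
    n = len(shard)
    gw = sum(2 * (w * x + b - y) * x for x, y in shard) / n
    gb = sum(2 * (w * x + b - y) for x, y in shard) / n
    return gw, gb


def all_reduce_mean(grads):
    """Average per-worker gradients, the role an all-reduce plays in practice."""
    k = len(grads)
    return tuple(sum(g[i] for g in grads) / k for i in range(2))


def train(data, workers=2, lr=0.05, steps=1000):
    """Data-parallel gradient descent with simulated workers.

    With equal-sized shards, the averaged gradient equals the full-batch
    gradient, so the result matches single-machine training.
    """
    shards = [data[i::workers] for i in range(workers)]
    w = b = 0.0
    for _ in range(steps):
        gw, gb = all_reduce_mean([gradient(w, b, s) for s in shards])
        w -= lr * gw
        b -= lr * gb
    return w, b
```

In real systems each shard lives on a different GPU or machine and the averaging step is a network collective, which is why interconnect bandwidth matters as much as raw compute.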

E. Build Cross-Functional Data and AI Teams

Break down organizational silos and foster collaboration between various disciplines.

- Data Mesh Principles: Consider adopting Data Mesh principles, where data is treated as a product, owned by cross-functional domain teams who are responsible for its quality, accessibility, and consumption.

- Shared Responsibility: Promote a culture of shared responsibility between data engineers, data scientists, ML engineers, cloud architects, and business stakeholders.

- Continuous Learning and Upskilling: Invest in continuous training programs to keep teams abreast of the latest advancements in AI, data engineering, and cloud technologies.

F. Start with Use Cases and Demonstrate Value Incrementally

Avoid a ‘big bang’ approach. Identify specific, high-value business use cases where AI can deliver clear, measurable impact on hyperscale data.

- Pilot Projects: Begin with small, manageable pilot projects to validate hypotheses, demonstrate ROI, and build internal expertise.

- Iterative Development: Adopt an agile, iterative approach to AI model development and deployment, continuously refining models and solutions based on real-world feedback and performance.

- Focus on Business Outcomes: Always tie AI initiatives to clear business outcomes (e.g., X% reduction in downtime, Y% increase in customer conversion, Z% improvement in fraud detection accuracy).

G. Embrace Responsible AI Practices

Given the ethical implications, integrate responsible AI principles into every stage of your data and AI lifecycle.

- Bias Detection and Mitigation: Implement tools and processes to detect and mitigate bias in training data and AI models.

- Fairness and Transparency: Strive for fairness in AI outcomes and provide mechanisms for explainability (XAI) to ensure transparency and build trust.

- Privacy-Preserving AI: Explore techniques like federated learning and differential privacy for training AI models on sensitive data while preserving privacy.

- Human Oversight: Maintain appropriate human oversight and intervention points in AI-powered decision-making processes, especially for critical applications.
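
As one example of what bias detection can look like in practice, the sketch below computes per-group selection rates and the gap between them, a metric often called the demographic parity difference. It assumes binary decisions (1 for a positive outcome) and a single group attribute; the function names are hypothetical, and this is only one of several fairness metrics, which can conflict with each other.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Share of positive decisions (value 1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(decisions, groups):
    """Gap between the highest and lowest group selection rates (0 = parity)."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())
```

A large gap does not prove a model is unfair on its own, but it is a useful signal for deciding where human review and deeper analysis are needed.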

The Future Trajectory of AI and Hyperscale Data

The symbiotic relationship between AI and hyperscale data is set to deepen and expand, driving even more profound transformations across industries and society.

A. Autonomous Data Management and Self-Optimizing Systems

The future will see AI playing an even more central role in managing the entire data lifecycle autonomously.

- Self-Healing Data Pipelines: AI systems will automatically detect and resolve issues in data ingestion, transformation, and storage pipelines.

- Autonomous Data Governance: AI will proactively identify and apply data governance policies, manage access controls, and ensure compliance without manual intervention.

- AI-Driven Database Optimization: Databases will use AI to self-tune, optimize query execution, and manage resource allocation dynamically, becoming largely autonomous.

- Cognitive Data Platforms: Platforms will become ‘cognitive,’ understanding user intent, intelligently discovering data, and proactively suggesting insights or solutions without explicit queries.

B. Greater Explainability and Trust in AI

As AI becomes more pervasive, the demand for explainability and trust will intensify.

- Next-Gen XAI Tools: New tools and frameworks will provide more intuitive and robust explanations for complex AI models, making them more transparent and auditable for business users and regulators.

- Trustworthy AI Frameworks: Industry standards and regulatory guidelines for trustworthy AI will become more mature, promoting fairness, transparency, and accountability in AI deployments at hyperscale.

- Federated Learning and Privacy-Preserving AI: These techniques will become mainstream, enabling AI training on distributed, sensitive hyperscale datasets without centralizing raw data, enhancing privacy and security.

C. AI at the Edge: Real-time, Localized Intelligence

The convergence of AI and hyperscale data will increasingly occur at the network’s edge.

- Edge-Native AI Models: AI models will be specifically designed and optimized to run efficiently on resource-constrained edge devices for real-time inference and decision-making.

- Decentralized Data Processing: More data processing and analytics will happen locally on edge devices, reducing reliance on cloud connectivity and enabling ultra-low latency applications (e.g., autonomous vehicles, smart factories).

- Edge-to-Cloud Continuum: A seamless continuum between edge, fog, and cloud computing will emerge, allowing intelligent data processing to occur at the most optimal location based on latency, bandwidth, and computational requirements.
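
The placement trade-off described above can be sketched with simple arithmetic: running inference in the cloud costs network transfer time plus a round trip, while running it at the edge costs only local compute time, which is usually slower. The model below is a deliberate simplification with hypothetical parameter names; it ignores queuing, energy, and cost, and considers latency only.

```python
def cloud_latency_ms(payload_bytes, bandwidth_mbps, rtt_ms, cloud_compute_ms):
    """Estimated end-to-end latency when sending the payload to the cloud."""
    transfer_ms = payload_bytes * 8 / (bandwidth_mbps * 1_000_000) * 1000
    return transfer_ms + rtt_ms + cloud_compute_ms


def choose_location(payload_bytes, bandwidth_mbps, rtt_ms,
                    edge_compute_ms, cloud_compute_ms):
    """Return 'edge' or 'cloud', whichever has the lower estimated latency."""
    cloud = cloud_latency_ms(payload_bytes, bandwidth_mbps, rtt_ms,
                             cloud_compute_ms)
    return "edge" if edge_compute_ms <= cloud else "cloud"
```

For instance, with a 10 Mbps uplink and a 40 ms round trip, a 1 MB camera frame takes about 800 ms just to upload, so a 120 ms edge model wins; a 1 KB sensor reading uploads in under a millisecond, so the faster cloud model wins.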

D. Generative AI for Data Augmentation and Synthesis

Generative AI will move beyond just creating text or images to playing a crucial role in data itself.

- Synthetic Data Generation: AI will generate realistic synthetic data for training models, especially useful where real-world data is scarce, sensitive, or difficult to obtain, addressing privacy concerns.

- Data Augmentation for Training: Generative AI can augment existing hyperscale datasets, creating variations and expanding training data for more robust and generalized AI models.

- Automated Data Storytelling: AI will not only analyze data but also generate compelling narratives and visualizations, making complex insights from hyperscale data more accessible and impactful for diverse audiences.

E. AI for Sustainable Computing

AI will be leveraged to optimize the environmental impact of processing hyperscale data.

- Green Data Center Optimization: AI will intelligently manage power consumption, cooling systems, and server workloads in data centers to minimize energy usage and carbon footprint.

- Resource Allocation Efficiency: AI will dynamically allocate compute, storage, and network resources in the cloud for hyperscale workloads, ensuring optimal utilization and reducing wasted energy.

- E-Waste Reduction: AI-powered predictive maintenance for hardware will extend the lifespan of digital infrastructure components, contributing to a reduction in e-waste.

Conclusion

The symbiotic relationship between Artificial Intelligence and hyperscale data is more than a technological trend; it is a major force shaping the next era of economic growth and societal transformation. In a world awash in unprecedented volumes, velocities, and varieties of information, AI is the engine that lets organizations not just manage this digital deluge but extract real value from it. From automating laborious data preparation and optimizing complex storage systems to fueling advanced analytics and training the next generation of intelligent models, AI redefines what’s possible with data.

While the journey to fully leverage AI-powered hyperscale data is fraught with challenges, including data quality, immense infrastructure demands, and critical talent gaps, the strategic advantages are too compelling to ignore. By embracing robust data governance, building scalable cloud-native platforms, championing MLOps, and fostering cross-functional collaboration, organizations can effectively harness this power. The future promises more autonomous data management, more transparent AI, localized intelligence at the edge, and more sustainable computing practices. Ultimately, AI doesn’t just process hyperscale data; it transforms raw information into actionable insight and provides the strategic foresight needed to thrive in an increasingly data-driven world.